by Sam Kwok

INTRODUCTION

Artificial intelligence (AI) has the power to change lives, companies, and the world. While interest in AI has recently exploded, the field has traditionally been a specialist domain. This article aims to make the field of AI approachable to a broader audience.

Venture capitalists look for value-creating technologies that can define the next era of innovation. The potential of AI to impact the world has been compared to that of the Internet or mobile computing. This article summarizes the key concepts and issues surrounding AI and explains its importance to humanity.

ECONOMIC IMPACT OF ARTIFICIAL INTELLIGENCE

Technological progress is the primary driver of GDP per capita growth, increasing output faster than labor or capital[1]. However, the economic benefits of technological progress are not necessarily evenly distributed across society. For example, technological change in the 19th century increased the productivity of low-skilled workers relative to that of high-skilled workers. Highly skilled artisans who controlled and executed full production processes saw their occupations threatened by the rise of mass production technologies. Eventually, many highly skilled artisans were replaced by a combination of machines and low-skilled labor.

Throughout the late 20th century, technological change worked in a different direction. The introduction of personal computing and the Internet increased the relative productivity of high-skilled workers. Low-skilled occupations that were routine-intensive and focused on predictable, easily-programmable tasks—such as filing clerks, travel agents, and assembly line workers—were increasingly replaced by new technologies.

Today, precisely predicting which jobs will be most affected by AI is challenging. We do know that AI will produce smarter machines that can perform more sophisticated functions and erode some of the advantages that humans currently possess. In fact, AI systems now regularly outperform humans at certain specialized tasks. In a new study, economists and computer scientists trained an algorithm to predict whether defendants were a flight risk using data from hundreds of thousands of cases in New York City. When tested on over a hundred thousand more cases that it had not seen before, the algorithm proved better than judges at predicting what defendants would do after being released[2]. AI may eliminate some jobs, but it could also increase worker productivity and the demand for specific skills. All occupations, from low- to high-skilled, will be affected by the technological change brought about by AI.

WHY THE RECENT EXCITEMENT WITH ARTIFICIAL INTELLIGENCE?

Although AI research started in the 1950s, its effectiveness and progress have been most significant over the last decade, driven by three mutually reinforcing factors:

- The availability of big data from sources including businesses, wearable devices, social media, e-commerce, science, and government;

- Dramatically improved machine learning algorithms, which benefit from the sheer amount of available data;

- More powerful computers and cloud-based services, which make it possible to run these advanced machine learning algorithms.

Significant progress in algorithms, hardware, and big data technology, combined with the financial incentives to find new products, has also contributed to the AI technology renaissance.

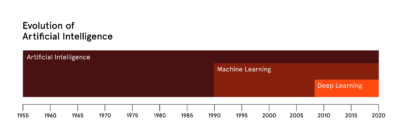

THE EVOLUTION OF ARTIFICIAL INTELLIGENCE

Artificial Intelligence

Artificial intelligence (AI) is an umbrella term for intelligence exhibited by machines or computer programs[3]. Simple AI programs that use rule-based logic to mimic primitive intelligence have existed since the 1950s. However, such programs were incapable of handling real-world complexity. Real-life conditions vary and are often unpredictable, and programmers could not possibly write code to handle every scenario.

Machine Learning

Machine learning (ML), a sub-field of AI, overcomes this limitation by shifting some of the burden from the programmer to the algorithm. Instead of basing the machine’s decision-making process on rules that are defined explicitly by a human, a machine learning algorithm identifies patterns and relationships in data that allow it to make predictions about data it has not seen before. Data is to machine learning what life is to human learning. Thus, for ML to work reliably, the technology requires continual access to massive volumes and a broad variety of data—referred to as big data[4].

ML practitioners develop their algorithms by using their data to train models. A model can be thought of as a black box (that is, an observer can use it without knowing its internal workings) that ingests data and produces an output that represents a prediction.

Models cannot make good predictions without data on which to base them. For this reason, a practitioner begins with a historical data set and divides it into a training set and a test set. The training set is used to train the model and contains the inputs for the model and the real values of the metric that the model is designed to predict. This allows the model to essentially check its answers and learn from its mistakes to improve its future predictions. After the training is completed, the test set is used to assess the effectiveness of the trained model. The primary aim of ML is to create a model that can generalize and make good predictions on data it has not seen before, not just for data on which it has been trained.
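The train-and-test workflow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the data set is synthetic (a hypothetical relationship of roughly y = 2x + 1 with noise), the "model" is a simple least-squares line, and the helper names `train_test_split`, `fit_line`, and `mean_squared_error` are made up for this example rather than taken from any particular library.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split them into (train, test)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_line(rows):
    """Train: ordinary least squares for y = slope * x + intercept."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mean_squared_error(model, rows):
    """Average squared gap between predictions and the real values."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in rows) / len(rows)


# Hypothetical historical data: inputs x and real values y (about 2x + 1).
noise = random.Random(42)
history = [(x, 2 * x + 1 + noise.uniform(-0.5, 0.5)) for x in range(40)]

train_set, test_set = train_test_split(history)
model = fit_line(train_set)                          # learn from the training set only
test_error = mean_squared_error(model, test_set)     # assess on unseen data
print(f"slope={model[0]:.2f} intercept={model[1]:.2f} test MSE={test_error:.3f}")
```

A low error on the held-out test set, not just on the training set, is what suggests the model generalizes.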

Deep Learning

Some of the most impressive recent advancements in ML have been in the sub-field of Deep Learning (DL). DL is helpful to practitioners because it allows algorithms to automatically discover complex patterns and relationships in the input data that may be useful for forming predictions. DL automates feature engineering, the process of identifying the input features that yield the best-performing model. With conventional ML techniques, feature engineering is often a tedious task that relies on human domain experts. DL overcomes this challenge by automatically detecting the most suitable features. In essence, deep learning algorithms learn how to learn. Given its breakthrough importance, it is valuable to understand the basics of deep learning, starting with the structure on which it is built: the artificial neural network.

WHAT IS AN ARTIFICIAL NEURAL NETWORK?

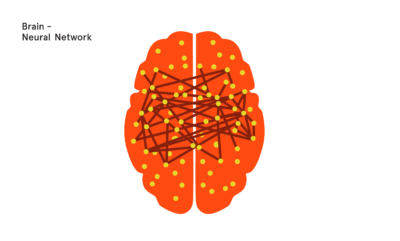

An Artificial Neural Network (ANN) is a computational model based loosely on the biological neural network of the human brain.

Although the mathematics of neural networking is highly sophisticated, the basic concepts of neural networks can be demonstrated without calculations.

Neural Networks Basics

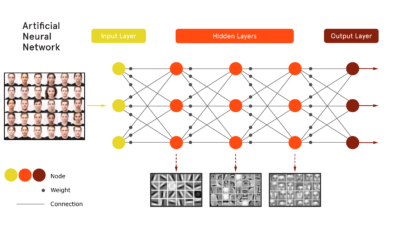

Neural networks are typically organized in layers of interconnected nodes, each of which applies an activation function. Data is presented to the network via the input layer, which connects to one or more hidden layers where the actual processing is done through weighted connections. The hidden layers in turn connect to an output layer, as shown in the accompanying diagram.
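To make the layered structure concrete, here is a minimal sketch of data flowing from an input layer through one hidden layer to an output layer. The layer sizes, the weight and bias values, and the choice of a sigmoid activation function are all assumptions made for illustration; real networks learn their weights rather than having them written by hand.

```python
import math


def sigmoid(z):
    """A common activation function that squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Each node takes a weighted sum of its inputs, adds a bias,
    and passes the result through the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked values: 2 inputs -> 3 hidden nodes -> 1 output node.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.05]


def forward(inputs):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)


prediction = forward([1.0, 2.0])
print(prediction)
```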

Most ANNs contain a learning rule that modifies the connection weights based on the input patterns presented to them. More simply, artificial neural networks learn by example, similar to biological neural networks. Human brains learn to do complex things, such as recognizing objects, not by processing exhaustive rules but through experience, practice, and feedback. For instance, a child experiences the world (sees a dog), makes predictions (“Dog!”), and receives feedback (“Yes!”). Without being given a set of rules, the child learns through training.

A neural network learns through training using an algorithm called backpropagation, short for the backward propagation of errors. With each example, learning occurs through the forward propagation of output and the backward propagation of weight adjustments. When a neural network receives its first input pattern, its weights have typically been initialized randomly, so its output is effectively a random guess as to what that pattern might be. It then calculates the “error” (how far its answer was from the correct answer) and adjusts its connection weights to reduce this error.
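The guess, measure, and adjust loop can be sketched with a very small network. Everything specific here is an assumption chosen for illustration: the `TinyNetwork` class is hypothetical, the task is the logical OR of two inputs, the activation is a sigmoid, the error is the squared difference from the target, and the learning rate of 0.5 is arbitrary.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Hypothetical network: 2 inputs -> n_hidden sigmoid nodes -> 1 sigmoid output."""

    def __init__(self, n_hidden=3, seed=1):
        rng = random.Random(seed)
        # Random starting weights, so the first outputs are effectively guesses.
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b_out = 0.0

    def forward(self, x):
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out)
        return hidden, output

    def train_step(self, x, target, rate=0.5):
        hidden, output = self.forward(x)  # forward propagation of output
        # Error signal at the output node for the loss 0.5 * (output - target)^2.
        delta_out = (output - target) * output * (1 - output)
        # Backward propagation: share the error among the hidden nodes.
        delta_hidden = [delta_out * w * h * (1 - h)
                        for w, h in zip(self.w_out, hidden)]
        # Adjust every weight a small step in the direction that reduces the error.
        for j, h in enumerate(hidden):
            self.w_out[j] -= rate * delta_out * h
        self.b_out -= rate * delta_out
        for j, d in enumerate(delta_hidden):
            for i, xi in enumerate(x):
                self.w_hidden[j][i] -= rate * d * xi
            self.b_hidden[j] -= rate * d


def mean_error(net, examples):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in examples) / len(examples)


# Training examples for logical OR: (inputs, correct answer).
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

net = TinyNetwork()
error_before = mean_error(net, examples)
for _ in range(5000):
    for x, target in examples:
        net.train_step(x, target)
error_after = mean_error(net, examples)
print(f"error before: {error_before:.4f}, after: {error_after:.4f}")
```

Comparing the error before and after training shows the effect of the repeated weight adjustments.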

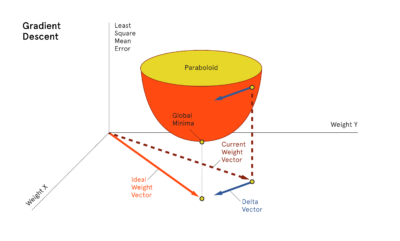

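Backpropagation is used in conjunction with gradient descent, an optimization algorithm that searches for a minimum of the error surface over the space of possible connection weights. The global minimum is the theoretical solution with the lowest possible error. In the simplest case the error surface is a paraboloid, as in the accompanying figure; for most real networks the surface is more irregular, and gradient descent may settle in a local minimum rather than the global one.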

The speed of learning is the rate at which the current solution converges toward the global minimum (the theoretical lowest possible error).
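Gradient descent is easiest to see on a bowl-shaped (paraboloid) surface. The sketch below assumes a hypothetical error function of two weights whose minimum sits at (3, -1); the function, the starting point, and the `learning_rate` and `steps` values are all invented for illustration. A larger learning rate converges in fewer steps on this surface, but too large a value would overshoot the minimum.

```python
def error(w1, w2):
    """Hypothetical bowl-shaped error surface with its minimum at (3, -1)."""
    return (w1 - 3) ** 2 + (w2 + 1) ** 2


def gradient(w1, w2):
    """Slope of the error surface in each weight direction."""
    return 2 * (w1 - 3), 2 * (w2 + 1)


def gradient_descent(start, learning_rate=0.1, steps=100):
    """Repeatedly step downhill, against the gradient."""
    w1, w2 = start
    for _ in range(steps):
        g1, g2 = gradient(w1, w2)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2


w1, w2 = gradient_descent(start=(0.0, 0.0))
print(f"w1={w1:.4f}, w2={w2:.4f}, error={error(w1, w2):.2e}")
```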

Once a neural network is trained, it may be used to analyze new data. That is, the practitioner stops the training and allows the network to function in forward propagation mode only. The forward propagation output is the model's prediction, which is used to interpret and make sense of previously unseen input data.

OTHER MACHINE LEARNING ALGORITHMS

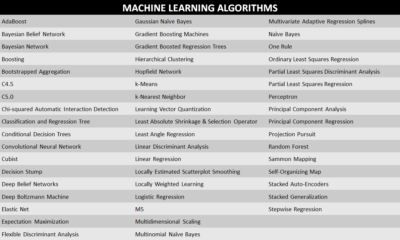

Neural networks are one of many algorithms used in machine learning. Additional machine learning algorithms are listed in the accompanying table.

CONCLUSION

AI will continue to be a valuable tool for improving the world. Executives, entrepreneurs, governments, and investors who take informed risks on AI technologies will be rewarded. By sponsoring basic AI literacy and working together to develop AI’s positive aspects, we can ensure that everyone can participate in building an AI-enhanced society and realizing its benefits.


Notes

[1] Galor, O., & Tsiddon, D. (1997). Technological Progress, Mobility, and Economic Growth. The American Economic Review, 87(3), 363-382. Retrieved from http://www.jstor.org/stable/2951350

[2] Kleinberg, J., Lakkaraju, H., Leskovec, J., Ludwig, J., & Mullainathan, S. (n.d.). Human Decisions and Machine Predictions. The National Bureau of Economic Research. Retrieved from http://nber.org/papers/w23180

[3] The definition of AI includes the concepts of “specific” AI and “general” AI. Specific AI is non-sentient artificial intelligence that is focused on one narrow task or pattern recognition. In contrast, general AI is intelligence of a machine that could successfully perform any intellectual task that a human can. General AI is a goal of some AI research and a common topic in science fiction and future studies. All currently existing AI systems are specific AI.

[4] It is a nuanced point, but there are situations in machine learning where there is little to no benefit of accumulating a greater corpus of data beyond a certain threshold. In addition, these massive data sets can be so large that it is difficult to work with them without specialized software and hardware.

Republishing not permitted.